- Expanding the data sources to cover a greater number of countries

- Factoring in the grid connection costs, a major obstacle for renewables projects but which do not form a part of the LCOE

- Allowing a different calculation of the LCOE

The Manipulative Gerbil: Playing around with Energy Data

Following two years in the Netherlands, I moved to Edmonton, Alberta in September 2020. About a year later, my family and I relocated to Metro Vancouver--being able to take in the ocean breeze is really important! I might not have much time, but if I did I would make music (with an oud), ride my bicycle and go fishing (ideally at the same time).

Sunday, 7 July 2024

Visualising NREL's Annual Technology Baseline ("ATB") data for wind and solar energy using R

Wednesday, 30 November 2022

Wind data in R, part I: more than one way to peel a potato

- A minimal introduction to wind power

- A quick attempt at downloading the data from NASA POWER, my favourite data source, and calculating the wind energy output

- An attempt to understand how bReeze would do it , and to compare the results with my reverse engineering approach

- A generalist round-up on wind power

Some introductory remarks on wind power

If you think about it, wind power is really just solar power in another form: since solar energy is unevenly distributed across the globe, we have an unequal distribution of thermal energy. This in turn drives the transfer of heat from one part of the earth to another, something we call wind. The earth's rotation about its axis is another helping factor here, but in a nutshell this is what happens. So how does a wind turbine turn that into electricity?If you have a volume of air of moving through the "swept area" (i.e., the area of its rotor blades as they rotate) of a wind turbine, let's call it A and that wind has a density ρ while moving at a speed v , then it's quick enough to calculate how much kinetic energy that wind flow is carrying:

The factor of 50%, as well as the cubic term in wind speed is a leftover from how kinetic energy is defined in physics. Essentially, this is energy which the wind has just by virtue of moving, and we can use it to make a turbine go around. Importantly, if you want the wind to keep flowing even after you extract some of it to drive a wind turbine, then you will eventually hit the "Betz Limit"--Wikipedia actually has a fairly good treatment of the calculus behind this--which is roughly ~50% of the wind energy. This is important because the design of wind turbines takes this limit into account--the wind turbines used for electricity will work under a specific regime of conditions.

For our purposes, we are only interested in a few salient facts about any given wind turbine. First, we want to know what the height of the hub (location of the nozzle) of the turbine is. Since a wind turbine might typically have a hub height at 100 m or more, a practical way of relating the wind speed at the hub height to the wind speed as measured at something more reasonable--say, 10 m elevation--is needed. The way to do this is quick and easy although it depends on the surrounding terrain:

Here, \alpha is the "Hellman exponent" where the turbine is located. Below, I use a simplified approach and make it 1/7 for land-based and 1/9 for offshore wind turbines. Note that the Hellman exponent is dependent on the "surface roughness" based on the location of the turbine. Most people would probably do the calculation using the surface roughness, but I am just trying to simplify this function. One important thing to remember is that the increase in wind speed as you go higher happens more rapidly in areas with jagged features--like a city--than in areas which are flat. Next, we want to know how much electricity is generated by a wind turbine if we know the location-specific wind speed at a given time. Wind turbine manufacturers provide this data in what is called the "power curve" of the wind turbine. Here's what the power curve of the Enercon E126 looks like.

Figure 1:

Before moving on to the section where I talk about using NASA POWER data to model what the output of the Enercon E126 would be, I just wanted to tie in our original equation above which quantifies the amount of kinetic energy of the wind and the electricity generated by a turbine. Let's take the same wind speeds from the power curve, and plug them into the expression for the kinetic energy in the wind. We can calculate the ratio of electricity output from the wind turbines

Our little attempt: hourly wind speeds

The first thing I'm going to do here is to get an idea of what the wind turbine we are looking at will do. After installing and loading "bReeze", I am going to find the power curve associated with the Enercon E126 wind turbine. This is a turbine which has a hub height of 135 m. It has a "cut-in" wind speed, below which it does not generate electricity, of 3 m/s and a "cut-out" wind speed, higher than which it is turned off, of 25 m/S.Incidentally, this is what the lovely Enercon E-126 looks like.

#We want the correct power curve here pow_curve = bReeze::pc("Enercon_E126_7.5MW.pow") pow_curve_df = data.frame(cbind(pow_curve$v, pow_curve$P)) colnames(pow_curve_df) = c("speed", "power")

In the next code block, I write a quick function which takes in a set of parameters: windspeed; the cut-in and cut-out windspeeds; the surface roughness at the location; the hub height; and a power curve. The returned value will be a vector containing the power output of the wind turbine expressed in kW.

calculate_wind_outputs <-function(windspeeds, measured_height, hub_height, power_curve, cutin_speed, cutout_speed, terrain) { #First, we calculate what the wind speed would be at the hub height of the given turbine #We can use a simple linear adjustment and just increase the speeds by the correct amount if(terrain == "onshore") { hellman_exponent = 1/7 } else if(terrain == "offshore") { hellman_exponent = 1/9 } adjustment_factor = (hub_height/measured_height)^hellman_exponent adjusted_wind_speeds = windspeeds*adjustment_factor #Let's create a vector which has the same length as the wind speeds power_output = adjusted_wind_speeds for(i in 1:length(power_output)) { if(adjusted_wind_speeds[i] > cutin_speed & adjusted_wind_speeds[i] < cutout_speed) { for(j in 1:nrow(power_curve)) { #Just make sure that this is working if(adjusted_wind_speeds[i] >= power_curve$speed[j] & adjusted_wind_speeds[i] < power_curve$speed[j+1]) { #We want the power output to match the power curve so long as the wind speed is the same as or greater than # the power curve's predicting variable and below the one above power_output[i] = power_curve$power[j] } } } #You get no power output if your wind speed is either below the cut-in or above the cut-out speed else if(adjusted_wind_speeds[i] < cutin_speed | adjusted_wind_speeds[i] >= cutout_speed) { power_output[i] = 0 } } #In keeping with good practice--a function should return only one variable return(power_output) }

How do I know that this worked? If you take the same default values from the power curve, plug them into our function, and then plot the result, you should get the same plot as in Figure 1. A quick way to do that is shown below.

Another thing worth looking into is the power coefficient of the wind. This is defined as the ratio of "energy in the wind/electric energy generated by turbine" and in the next code block, I plot this value. You should find that the maximum value is just below the Betz Limit and coincides with a wind speed which is right at the plateau of the "rated power" of the wind turbine. An important side note--you can generally find the diameter of a wind turbine by looking up the manufacturer's website, like I did. You might want to look out for the fact that wind turbines from the same manufacturer sometimes have confusingly similar names and also slightly different specifications (for whatever reason, this seems to happen when a single manufacturer markets products to both the North American and European markets). In this case, it shouldn't be an issue.

#The power coefficient of the wind is the ratio of # wind power electricity to the power in the wind area_of_turbine = pi*(0.5*127)^2 wind_sppeds = pow_curve_df$speed density_of_air = 1.225 #Keep in mind that the expression for kinetic energy was in J, not kWh #So we first multiply by 1/1000 #Notice though that we are assuming both that the energy is constant #throughout the hour and therefore that the wind turbine keeps going kinetic_energy_in_wind = 0.001*0.5*area_of_turbine*density_of_air*wind_sppeds^3 power_coefficient = pow_curve_df$power/kinetic_energy_in_wind

Something to notice is that the maximum power coefficient (~50%) comes in at around 10 m/s (wind speed at hub height), which is when the turbine is only generating 3.75 MW of power, or about 50% of its capacity. The thing to note here is where the sweet spot of 100% capacity kicks in, which for our case is between 16 m/s and 25 m/s. I will explain a little more about this in the final section of this blog post.

Finally, let's use some real-world data and our function to give us a sort of back-of-the-envelope calculation of what electricity generated from a single E-126 turbine would look like. I wanted to make the next bit comparable to the default values in the bReeze user guide, so I borrowed some coordinates from roughly the area where the developers of that package live. These are used below to download a year's worth of data.

Note: my operating assumption below is that the wind data which comes bundled with bReeze is from Neubuerg in Germany, and hence the choice of coordinates. You might also want to look up more on nasapower , my favourite of all time R package.

#The first thing we do is to download the hourly wind data from NASA POWER #We want to get the wind speeds at both 10m and 50m hourly_wind_data = nasapower::get_power(community = "re", pars = c("WS10M", "WS50M"), temporal_api = "HOURLY", lonlat = c(11.1421496, 48.7716291), dates = c("2008-12-31", "2010-01-01")) #As usual, we want to make sure we have a dataframe, which is the easiest way to work with this kind of thing in R #The original data download is a tibble, with some metadata which we can discard here hourly_wind_data = data.frame(hourly_wind_data) #Put it through the works for the Enercon E-126 #I'm going to use the windspeeds from the 50m hub height, for fun #Please note that as a decent member of the human race, I only use metric/SI units if at all possible hourly_wind_energy = calculate_wind_outputs(windspeeds = hourly_wind_data$WS50M, measured_height = 50, hub_height = 135, cutin_speed = 3, cutout_speed = 25, power_curve = pow_curve_df, terrain = "onshore") #What kind of capacity factor does this give us? #Convert to MWh #Then take into account that the total possible energy generated is 7.5 MW * 8760 hours in a year capacity_factor_rough = 0.001*sum(hourly_wind_energy)/(7.5*8760)

Working through the code below, we find that the annual capacity factor for our selected turbine at our randomly selected is about 17%. Does bReeze give a much different answer?

How bReeze would do this, and a quick comparison

At its heart, bReeze is a package which does something really quite similar--it takes some data on wind speeds, defines the mast/hub height of a turbine and its power curve, and gives us values for annual power output. True to form for an R package however, it also lends itself to some statistical analyses of the wind profiles of specific locations. One thing about bReeze which makes sense when you think about it is that, being aimed at specialists, users really need to understand a lot of the moving parts of wind power before they can meaningfully use this particular R package.First, let's load some of the data bundled with bReeze and takea look at what it contains

#How to calculate it in bReeze #We already did this using our manual method but we will leave out the re-naming of the columns # and the conversion to a DF--bReeze uses these objects as they are pow_curve = bReeze::pc("Enercon_E126_7.5MW.pow") #Let's also get the wind speed data which is bunlded with bReeze data("winddata", package = "bReeze") data_from_breeze = winddata

One thing you will notice is that this data contains much more than just the wind speeds,but also the wind directions. You will see that these are given in units of degrees, which can be easily converted to cardinal directions, for example using this conversion here . Another interesting point, which will come up when we move on to the Weibull distribution (the next blog post, I hope!) is that this data comes not only with average wind speeds within a time slot, but also the standard deviation; so we know not only how windy it is, but can infer things like turbulence in the wind and other measures of variability. Lasly, the data bundled with bReeze covers 10-minute time intervals covering 270 days, from May, 2009 to the end of January, 2010. This might seem odd, but probably chimes with the real-world availability of actual windspeed measurements using ground-based equipment (which may or may not be more reliable than NASA's satellite-based readings).

#To calculate the annual energy output from bReeze, we would simply run the following code annual_energy_from_breeze = bReeze::aep(profile = our_wind_profile, hub.h = 135, pc = pow_curve) #You can see that we are keeping that hub height and power curve from last time # but we also now need to create a wind profile, shown below #Our first sub-task is going to be to extract the wind speeds and standard deviations measured at 40 m measured_40_speed = data_from_breeze$v1_40m_avg measured_40_std = data_from_breeze$v1_40m_std direction_from_breeze_set = data_from_breeze$dir1_40m_avg #We combine the average wind speed, standard deviation of that wind speed and # its direction into a "set" set40m = bReeze::set(height = 40, v.avg = measured_40_speed, v.std = measured_40_std, dir.avg = direction_from_breeze_set) #The next thing to do is to build a "mast" object, which combines a set of wind data # together with timestamps #Note that for this to work, we need to format the timestampes correctly #For the sake of completeness, I am going to give the timestaps a CET timezone timestamp_from_breeze = bReeze::timestamp(data_from_breeze$date_time, tz = "CET") mast_for_breeze = bReeze::mast(timestamp = timestamp_from_breeze, set40m) #So now we have a mast object which ties together a set of wind speed data to date time elements # We build a "wind profile" which is correctly formatted to use with our function in bReeze our_wind_profile = bReeze::windprofile(mast = mast_for_breeze, v.set = 1, dir.set = 1) #Note that I have chosen to use the first (and only) wind speed and direction sets #Now let's go back to our earlier line of code annual_energy_from_breeze = bReeze::aep(profile = our_wind_profile, hub.h = 135, pc = pow_curve) #We can now access the capacity factor--18% annual_energy_from_breeze$capacity

If you run this code, you get an increase of the capacity factor from about 17.3% to 18.6%. At first glance this might seem unimportant, but it is in fact a significant relative increase. In my next blog post, I am going to explore two different sources for this discrepancy: the actual source of the data and the statistical "Weibull distribution" which bReeze uses to model the wind speeds throughout the year. That's for a later discussion though!

Some concluding notes on choosing wind turbines

In a previous blog post , I described how to use R to graphically map the output of solar energy across specific regions, tying geography with solar insolation and electricity output. Similarly, electricity from the wind is closely tied to geography. How this ties into a specific wind turbine design is to consider where the rated power--when the output matches the "nameplate" or maximum performance of the turbine--and how that compares with the wind profile of the selected location. So if you have a location where the wind is not likely to ever be within the range of rated wind speed (when it first reaches the rated power, in our case 16 m/s at hub height) and the cut-out speed (25 m/s in our case), then you've probably not found the right wind turbine yet. At another level, you would also want to consider the expected power coefficient as well.

One really important aspect of this which I've left out is the question of wind "shading"--just like with solar panels having the potential to cast a shadow over panels in the following row, wind turbines can extract too much energy from other turbines which sit "down wind" from there, thus "shading". Additional factors also play in to the layout and siting of a wind farm, including for example the distance between turbines and the distance to a power interconnection.

I am going to leave out wind farm design optimization from this post, but you might be interested in some of the reading notes below.

Data and some reading

- The Canadian Wind Energy Atlas--although I think their geographical resolution is a bit over the top. You will need an account setup.

- ...not to be confused with the Canadian Wind Turbine Database , where you can download an Excel spreadsheet letting you know exactly how many MW of capacity are installed, and where. I really like this dataset.

- Some specialist reading on optimization of wind farm layouts

- Another specialist paper on the same topic, this time from NREL in the US

- Unless otherwise attributed, all my images are taken from sites using a Creative Commons license.

Wednesday, 12 May 2021

Follow-up: Second Outtake on Calgary RUG Meeting on 21 April

For the matter at hand, here's a second "out take" from the talk, where I explain how to create a map which labels cities across the world with dots corresponding in size to their annual solar radiation.

By the time you're done, you should have a map which looks like this one here.

Have fun!

#To get the longitude, latitude for the cities in question, you will need to install nominatim for Open Street Maps #devtools::install_github("hrbrmstr/nominatim") # You will ned an OSM API key which you can get for free from Map Quest # Then you just populate the data frame like so calgary.rug.cities = c("Calgary", "Vancouver", "Edmonton", "Yellowknife", "Winnipeg", "Utrecht", "Buenos Aires", "Ramallah", "Doha", "Berlin") calgary.rug.countries = c("CA", "CA", "CA", "CA", "CA", "NL", "AR", "PS", "QA", "DE") #You need to know the ISO country codes for the countries in question, I suppose calgary.rug.solar.df = data.frame(matrix(nrow = length(calgary.rug.cities), ncol = 2)) for(i in 1:length(calgary.rug.cities)) { place_holder = nominatim::osm_geocode(query = calgary.rug.cities[i], country_codes = calgary.rug.countries[i], key = myosmapikey) calgary.rug.solar.df[i,1] = place_holder$lon calgary.rug.solar.df[i,2] = place_holder$lat print("One more city") } #We will need 13 columns of insolation data, one for each month and one for the annual values calgary.rug.solar.monthly = data.frame(matrix(nrow = nrow(calgary.rug.solar.df), ncol = 13)) for(i in 1:nrow(calgary.rug.solar.monthly)) { request_solar_ghi = nasapower::get_power(community = "SSE", pars = "ALLSKY_SFC_SW_DWN", temporal_average = "CLIMATOLOGY", lonlat = c(calgary.rug.solar.df[i,1], calgary.rug.solar.df[i,2])) #To see how this next line works, you really have to know what the structure of the returned response from NASAPOWER calgary.rug.solar.monthly[i,] = data.frame(request_solar_ghi[which(request_solar_ghi$PARAMETER == "ALLSKY_SFC_SW_DWN"),][,4:16]) } colnames(calgary.rug.solar.monthly) = colnames(data.frame(request_solar_ghi[,4:16])) #Check this to see what it is calgary.rug.solar.monthly rownames(calgary.rug.solar.monthly) = calgary.rug.cities calgary.rug.solar.df = cbind(calgary.rug.solar.df, calgary.rug.solar.monthly$ANN) calgary.rug.solar.df row.names(calgary.rug.solar.df) = calgary.rug.cities colnames(calgary.rug.solar.df) = c("LON", "LAT", "Annual GHI") calgary.rug.solar.df #The annual value is given as a "average day," so we need to fix it calgary.rug.solar.df$`Annual GHI` = calgary.rug.solar.df$`Annual GHI`*365 calgary.rug.solar.df calgary.rug.cities.diffuse = vector(length = length(calgary.rug.cities)) for(i in 1:length(calgary.rug.cities.diffuse)) { diffuse_request = nasapower::get_power(community = "SSE", pars = "DIFF", temporal_average = "CLIMATOLOGY", lonlat = c(calgary.rug.cities.for.presenting$LON[i], calgary.rug.cities.for.presenting$LAT[i])) annual_diffuse = data.frame(diffuse_request)$ANN calgary.rug.cities.diffuse[i] = annual_diffuse } calgary.rug.cities.dnr = vector(length = length(calgary.rug.cities)) for(i in 1:length(calgary.rug.cities)) { dnr_request = nasapower::get_power(community = "SSE", pars = "DNR", temporal_average = "CLIMATOLOGY", lonlat = c(calgary.rug.cities.for.presenting$LON[i], calgary.rug.cities.for.presenting$LAT[i])) annual_dnr = data.frame(dnr_request)$ANN calgary.rug.cities.dnr[i] = annual_dnr } calgary.rug.cities.diffuse[is.na(calgary.rug.cities.diffuse)] <- 0 calgary.cities.diff_to_dnr = calgary.rug.cities.diffuse/calgary.rug.cities.dnr #Yelloknife is a special case it seems; just not a lot of sunlight in some parts of the year calgary.rug.yellowknife_diffuse1 = data.frame(nasapower::get_power(community = "SSE", pars = "DIFF", temporal_average = "CLIMATOLOGY", lonlat = c(calgary.rug.cities.for.presenting$LON[4], calgary.rug.cities.for.presenting$LAT[4]))) calgary.rug.yellowknife_diffuse = sum((calgary.rug.yellowknife_diffuse1)[,4:16], na.rm = TRUE) calgary.rug.yellowknife_dnr1 = data.frame(nasapower::get_power(community = "SSE", pars = "DNR", temporal_average = "CLIMATOLOGY", lonlat = c(calgary.rug.cities.for.presenting$LON[4], calgary.rug.cities.for.presenting$LAT[4]))) calgary.rug.yellowknife_dnr = sum(calgary.rug.yellowknife_dnr1[,4:16], na.rm = TRUE) calgary.rug.yellowknife_diff_to_dnr = calgary.rug.yellowknife_diffuse/calgary.rug.yellowknife_dnr calgary.cities.diff_to_dnr[4] = calgary.rug.yellowknife_diff_to_dnr calgary.rug.cities.for.presenting$DiffDNR = calgary.cities.diff_to_dnr #Before going any further, please have a quick check to see that the different data frames are reasonable before trying to plot them myPaletter = colorRampPalette(rev(brewer.pal(11, "RdBu"))) scaled_map = scale_color_gradientn(colors = myPaletter(25), limits = c(min(calgary.rug.solar.df$DiffDNR2), max(calgary.rug.solar.df$DiffDNR2)), name = "Diffuse-to-DNR \n %-age") #The map for diffuse to DNI ggplot2::ggplot(data = world_map) + geom_sf() + geom_point(data = calgary.rug.solar.df[1:10,], mapping = aes(x = LON, y = LAT, color = (DiffDNR2)), size = 5) + coord_sf(xlim = c(min(calgary.rug.cities.for.presenting$LON)-10, max(calgary.rug.cities.for.presenting$LON) + 10), ylim = c(min(calgary.rug.cities.for.presenting$LAT)-10, max(calgary.rug.cities.for.presenting$LAT)+10), expand = F) + scaled_map + ggtitle("Diffuse to DNR annually \n selected cities") +theme(panel.background = element_rect(fill = "lightblue", color = "blue", size = 0.5))

Thursday, 8 April 2021

What a time to be alive! Hourly solar data in R.

Hourly Data from the NASA POWER API

< /a> I absolutely love Adam Sparks' nasapower package; it's no exaggeration to say that this is definitely my favourite CRAN package (maybe nicethings is a close second, but it's not even on CRAN last I checked, so). As a wrapper for the NASA POWER API, nasapower is just brilliant. The problem is though that the API at this stage has a serious limitation on the time resolution of the data it allows you to download--at the moment, the finest temporal resolution is daily, so you can seriously miss out on some important details within the data. NASA have made clear that they will begin allowing hourly data downloads by July, and nasapower will be changing to meet the times. What if you need hourly data now, however, and are willing to take a risk with the beta version of the NASA POWER API? The Beta version of the NASA POWER API does indeed allow downloads hourly data. Although not perfect, hourly data is a big improvement. This means though that you would forget about the easy to use wrappers and instead focus on getting/scanning any dangers. In general, I found it difficult to get comprehensive guides to using APIs in R; there seemed not to be a clear and reproducible fix which always worked with all APIs. In the end, I found the answer lying on Tony Haber's blog. The answer is adapated for our needs.# Converting an API into a useable format # We will need to use the following packages library(httr) # for the GET request library(jsonlite) # for the fromJSON -- note that there are always competitors for this library(tibble) # for enframe #The first request we download is for GHI, or "ALLSKY" insolation #The second is for temperatures #The query string for the API request is based a start and end dates of 1 Aug 2009 to 1 Sep 2009, in a YYYYMMDD format #We give the longitude and latitude of Qatar as 51.25 and 25.2 (these are approximate) #and then use parameters as "ALLSKY_SFC_SW_DWN" or "T2M" as appropriate #set the URL to download hourly data response_qatar_aug_sep_2009 = GET("https://power.larc.nasa.gov/beta/api/temporal/hourly/point?start=20090801&end=20090901&latitude=25.2&longitude=51.25&community=re¶meters=ALLSKY_SFC_SW_DWN&format=json&user=dataqueryabed&header=false&time-standard=lst") response_qatar_aug_sep_2009_temperatures = GET("https://power.larc.nasa.gov/beta/api/temporal/hourly/point?start=20090801&end=20090901&latitude=25.2&longitude=51.25&community=re¶meters=T2M&format=json&user=dataqueryabed&header=false&time-standard=lst") #Let's ignore the metadata and take only the "content" content(response_qatar_aug_sep_2009, as="text") content(response_qatar_aug_sep_2009_temperatures, as = "text") #We want this not to be in JSON, but a "list" type of data # unframed_response = (fromJSON(rawToChar(response_qatar_aug_sep_2009$content))) unframed_response = (fromJSON(rawToChar(response_qatar_aug_sep_2009$content))) unframed_response_temperatures = (fromJSON(rawToChar(response_qatar_aug_sep_2009_temperatures$content))) #Following Tony El Habr's steps #framed_response = enframe(unlist(ugly_response)) framed_response = enframe(unlist(unframed_response)) framed_response_temperatures = enframe(unlist(unframed_response_temperatures)) #We now have hourly data from the NASA POWER API #We can remove the metadata from both dataframes # Look at the structure of these data frames however. The data we actually want # has a row name in the $name column of something like "properties.parameter..." etc # Let's remove the metadata rows, and let's do it with a function to make life more modular remove_metadata_from_framed <- function(data_input_framed) { rows_to_remove = vector() for(i in 1:nrow(data_input_framed)) { #Make sure you have stringr installed and called if(! str_starts(data_input_framed$name[i], "properties")) { rows_to_remove = append(rows_to_remove, i) } } framed_no_metadata = data_input_framed[-c(rows_to_remove),] return(framed_no_metadata) } #This simple function deletes rows where the row name does not # begin with "properties" qatar_august_GHI = remove_metadata_from_framed(framed_response) qatar_august_T2M = remove_metadata_from_framed(framed_response_temperatures) #A word to the wise: the units for GHI are now Wh/m^2 instead of kWh/m^2 #Just as a kind of basic check, we can confirm too that the solar insolation in Qatar #is a likely predictor for the temperatures there > summary(lm(qatar_august_T2M$value ~ qatar_august_GHI$value))$r.squared [1] 0.8927242 #You can also, eg, plot these values ... the sky's the limit

Thursday, 11 March 2021

"The Alberta Albedo Advantage", or "Why my transitions always darken in Alberta"

Before moving on to the final part of this series on solar irradiance and solar PV power across Alberta, I wanted to do a little segway and talk about how albedo is a particularly relevant issue for the workings of PV panels in Alberta and similar places. This is also in part a short elaboration/commentary on a presentation I heard as part of a webinar organised by Solar Alberta on February 25.

Let me say: if you'd rather just jump to the bit which has R codes to download the relevant data, then please just click here . If you're wondering what albedo is, please read on.

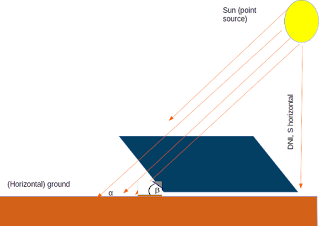

In the earlier blog post, what I did was to model the amount of solar insolation at a given site through the "Global Horizontal Irradiation," or GHI, which is a measure of the amount of solar energy that falls on a horizontally flat solar panel that is "looking up". GHI is in turn made up of two separate components. The larger of these is the component of the solar insolation which falls more or less straight down, also known as Direct Normal Irradiance or "DNI". A second component however is made up of the solar insolation which hits the panel after bouncing off of the atmosphere and, especially in the case of PV panels deployed in urban settings, of large, reflective structures.

Finally, it's worth considering also what happens when the solar PV panel is--as is typical--tilted. In that case, the total solar irradiation on the PV panel--in the "plane of array"--is the sum of three distinct components, one of which is the component which falls directly on the panel in a normal from the sun; a second component which is diffuse solar radiation from the atmosphere and which lands on the tilted panel; and a third component which is reflected off of the ground and meets the panel. Programmatically, this is just:

Gtotal = GDNI + GDIFF + Gground

A really good way to understand these different components is to read the explanations of various (competing) models of POA irradiance models on the Sandia website. For my purposes, I will use the following conversions:

- Beam irradiance, GBeam

- The beam irradiance is a product of the cosine of the tilt angle (θ) and the DNI: Gbeam = GDNI *cos(θ)

- Ground reflected component, GGround

- The ground reflected component is going to similarly be a function of the tilt angle but also an "albedo" constant, ρ ,which we can quantify for each month at each location. Note the definition of albedo right there: it's simply a two-dollar word for how much light is reflected off a particular piece of ground.

- Diffuse component, GDIFF

- Let me just refer you back to the Sandia website. I'll be frank, there are too many competing models to compute how much solar irradiance is diffuse in the atmosphere above a certain site at a given time, and I'd rather just focus on crunching out the numbers for this blog post. I will simply download the diffuse irradiance below.

Let me just point out, once again: it's important not to confuse the GHI and DNI types of irradiance. The latter is the bit which falls directly downwards on a flat solar PV panel, while the former includes other components.

Also, you're possibly wondering about the full title of this blog post. Here's a picture of my transition type glasses which I took on my Edmonton balcony the other (not very sunny) day. What could the fact of the transitions having faded to black on a not-so-sunny day got to do with solar PV electricity and its availability? Quite a lot!

Finally, before moving on to the R code, I should probably point out that we will make use of a specific way to calculate the tilt angle. As with so many things, there are multiple approaches to calculating a tilt angle, which in general factor in the latitude of a given location and a temporal factor. Depening on your desired level of exactness, this temporal factor can vary from month, day or even hour: it is meant to account for the changes in the solar azimuth angle and the location of the PV panel. You can find papers which compare multiple models for calculating the optimal tilt angle for a given location, such as this one from Palestine. For Western Canada--which we've defined here as just the provinces of British Columbia and Alberta--the latitudes range from 48.56 to 59.07, measuring roughly 1100 kms North-South. Figuring out a specific tilt angle for each location within our dataset, while more "correct," might not be a great use of time for a simple blog post.

One perfectly reasonable compromise I can find is to use the approach in Stanicu and Stanicu, which is to define the tilt angle θ as the sum of the latitude, φ and declination angle, δ : θ = φ + δ

For its part, the declination angle is a function of the day of the year, d:

δ = -23.45*cos(0.986*(d+10))

In a later part of this post, I will show how this can be coded into R.

The R Codes

From here on, I'm just going to focus on the mechanics of downloading the data and presenting it in the same way as I did last time. Once finished, I will show how albedo--due largely to snow cover--makes the question of solar insolation and, eventually, PV electricity, in Alberta more nuanced than would be thought about if all you did is look at GHI.In the next code snippet, I am going to first load all of the R packages I need and then move on from there to add names to the "Census Divisions" in Alberta.

library(rgeos) library(maptools) library(ggmap) library(ggplot2) library(sp) library(rgdal) library(raster) library(nasapower) library(scales) library(plyr) alberta_division_names = c("Medicine Hat", "Lethbridge", "Claresholm", "Hanna", "Strathmore", "Calgary", "Wainwright", "Red Deer", "Rocky Mountain House", "Lloydminster", "Edmonton", "Cold Lake", "White Court", "Hinton", "Canmore", "Fort McMurray", "Slave Lake", "Grande Cache", "Grande Prairie") #We want to be able to combine/compare these with the districts in British Columbia western_canada_province_names = western_canada_ellipsoid@data$NAME_1 western_canada_division_names = c(alberta_division_names, western_canada_ellipsoid@data$NAME_2[20:47]) western_canada_district_names = c(alberta_division_names, western_canada_ellipsoid@data$NAME_2[20:47]) western_canada_albedo_annual = cbind(western_canada_district_names, western_canada_albedo_monthly[,13])

Next, one straightforward thing is to use nasapower to download values for the diffuse solar irradiation and also the surface albedo constant. The "parameter," or keyword, which nasapower uses to define these values are "DIFF" and "SRF_ALB", and to turn these into dataframes. As always, I am using the "CLIMATOLOGY" timeframe which takes monthly averages for the period between 1984 and 2013 and the "SSE" community which specifies that the units used will be useful for the renewable energy community. In the code snippet below: I create distinct data.frame objects which cover all of the sets of data that we want. Unlike in the previous post, I am just going to treat "Western Canada" as one single unit here and from there . I download both sets of data for each site in our dataset. From there, I divide each request from the server into separate data.frame. Finally, I populate each row of the dedicated data frames with the relevant retrieved data.

One thing you'll notice is that I do two separate requests: one for the GHI alone and one for the albedo, DNI/DNR and Diffuse components combined. This is purely because the API request only allows a maximum of three parameters to be downloaded at once if you're using the "CLIMATOLOGY" timeframe. Next, I should point out that while I also downloaded GHI radiation last time, that was limited to using annual values. Later on, it will become clear why monthly data are also valuable for this blog post.

#We want four types of data on a monthly basis for each site: albedo constants as well as GHI, DNR (aka DNI) and Diffuse insolation western_canada_dnr_monthly = data.frame(matrix(ncol = 13, nrow = nrow(western_canada_coordinates))) western_canada_ghi_monthly = data.frame(matrix(ncol = 13, nrow = nrow(western_canada_coordinates))) western_canada_albedo_monthly = data.frame(matrix(ncol = 13, nrow = nrow(western_canada_coordinates))) western_canada_diffuse_monthly = data.frame(matrix(ncol = 13, nrow = nrow(western_canada_coordinates))) for(i in 1:nrow(western_canada_coordinates)) { #Get the data from NASA POWER #Make sure to use the correct parameter names from nasapower #retrieved_parameters = nasapower::get_power(community = "SSE", pars = c("DNR", "DIFF","SRF_ALB"), lonlat = c(western_canada_coordinates[i,1], #See the explanation above for why we do this with two requests western_canada_coordinates[i,2]), temporal_average = "CLIMATOLOGY") retrieved_global_horizontal = nasapower::get_power(community = "SSE", pars ="ALLSKY_SFC_SW_DWN", lonlat = c(western_canada_coordinates[i,1], western_canada_coordinates[i,2]), temporal_average = "CLIMATOLOGY") #Split the API request into separate data.frames #Notice that the original request returns a tibble, which is more information than we want/need #Once we divide it into 4 data.frames, we fill one row of each of the data.frames we want retrieved_albedo = data.frame(retrieved_parameters[which(retrieved_parameters$PARAMETER == "SRF_ALB"),]) retrieved_diffuse = data.frame(retrieved_parameters[which(retrieved_parameters$PARAMETER == "DIFF"),]) retrieved_dnr = data.frame(retrieved_parameters[which(retrieved_parameters$PARAMETER == "DNR"),]) retrieved_ghi = data.frame(retrieved_global_horizontal[which(retrieved_global_horizontal$PARAMETER == "ALLSKY_SFC_SW_DWN"),]) #Since we've set up data.frames for the information/data we need, populate each row of those two data.frames western_canada_albedo_monthly[i,] = retrieved_albedo[,4:16] western_canada_diffuse_monthly[i,] = retrieved_diffuse[,4:16] western_canada_dnr_monthly[i,] = retrieved_dnr[,4:16] western_canada_ghi_monthly[i,] = retrieved_ghi[,4:16] #Just a quick check print(paste("Ya habeebi, we are at site", i, sep = ", ")) }

We now have monthly averages for the DNR, the Albedo constant and the diffuse irradiation/insolation for each of the 47 Level 2 districts in Western Canada. As with the previous post, the data is gathered based on the longitude and latitude coordinates of the centroids of each district, using the NASA POWER package from Cran. Doubtlessly, using the centroids for each of these districts is probably introducing at least some kind of errors--you wouldn't necessarily want to place solar panels there unless you've determined that doing so is coincidentally the optimal location for solar radiation. I might re-visit this in a later blog spot, but for now this will do. One thing which I never quite liked but have found efficient is the very "tight" syntax for subsetting data.frames: your_subset = your_data_frame[which(your_data$COLUMN_NAME == "your criterion",)]. If you're confused about why I chose columns 4:16, please see the previous post.

How to compute the tilt angles per site per month? In the snippet below, I define what the "median day" per month is. I then calculate straight away what the tilt angle is for that median day.

days_in_months = c(31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31) #Now we want is to know what the median number of days midpoint_of_months = ceiling(0.5*days_in_months) #Now, to find the median day of each month as out of the day cumulative_days_months = cumsum(days_in_months) median_days_of_months = vector(length = 12) median_days_of_months[1] = midpoint_of_months[1] for(i in 2:12) { #We start with the previous month and then add to it to the median number #of days from the present month median_days_of_months[i] = cumulative_days_months[i-1] + midpoint_of_months[i] }

It's important to make clear that R computes trignometric functions using radians while so far we've been assuming that it's degrees. Below, I create a simple function which turns degrees into radians and then finally returns a monthly tilt angle for each of the Level 2 sub-divisions in Western Canada.

#Make a function that takes degrees and returns radians deg_to_rad <- function(degrees) { rads_to_return = degrees*pi/180 return(rads_to_return) } #Defining the declination angles #Define the number of days per month days_in_months = c(31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31) #Now we want is to know what the median number of days midpoint_of_months = ceiling(0.5*days_in_months) #Now, to find the median day of each month as out of the day cumulative_days_months = cumsum(days_in_months) median_days_of_months = vector(length = 12) median_days_of_months[1] = midpoint_of_months[1] for(i in 2:12) { #We start with the previous month and then add to it to the median number #of days from the present month median_days_of_months[i] = cumulative_days_months[i-1] + midpoint_of_months[i] } #Declination angles, change only by month declinations_monthly = vector(length = 12) for(i in 1:12) { declinations_monthly[i] = deg_to_rad(-23.45*cos(deg_to_rad(0.986*(median_days_of_months[i]+10)))) } #Now create a data.frame holding a monthly value of the optimal tilt angle #for each site tilt_angles_western_canada = data.frame(ncol = 12, nrow = nrow(western_canada_coordinates)) for(i in 1:nrow(western_canada_coordinates)) { for(j in 1:12) { tilt_angles_western_canada[i,j] = deg_to_rad(western_canada_coordinates[i,2]) + declinations_monthly[j] } }

Now with the monthly tilt angles, we can compute the tilted insolation/irradiance per site per month, this is called a "Plane of Array" (POA) irradiance, as discussed above. The code snippet below implements the straightforward equation I copied in the earlier part of this blog post. Note that we will leave out the consideration of "annual" values for now--for reasons that will eventually become obvious.

calculate_poa_monthly <- function(tilt_angles, ghi_values, dnr_values, diffuse_values, albedo_values) { poa_monthly_values = data.frame(matrix(nrow = nrow(tilt_angles), ncol = 12)) for(i in 1:nrow(tilt_angles)) { for(j in 1:12) { #Bring this out here to simplify the spaghetti below tilt_inner_part = cos(tilt_angles[i,j]) poa_part_1 = dnr_values[i,j]*tilt_inner_part poa_part_2 = ghi_values[i,j]*albedo_values[i,j]*0.5*(1- tilt_inner_part) poa_part_3 = diffuse_values[i,j] poa_monthly_values[i,j] = poa_part_1 + poa_part_2 + poa_part_3 } } return(poa_monthly_values) } #Execute this function with the data we have now western_canada_poa = calculate_poa_monthly(tilt_angles_western_canada, western_canada_ghi_monthly, western_canada_dnr_monthly, western_canada_diffuse_monthly, western_canada_albedo_monthly)

To avoid an ugly headache: keep in mind that we've already converted the units for the tilt angles from

At this point, let's say we wanted to just do something nice and pretty like the map from the last time. Just simply show the Level 2 districts in Alberta + British Columbia and then plot them, just like last time. In the code snippet below, I am going to repeat the same steps, more or less, like last time and the post a map showing a map of the POA insolation across the 47 Level 2 districts of Western Canada. In the section following that, we're going to go over some of the seasonal and regional trends of the POA across Alberta and British Columbia.

#Create the vector of annual values of the POA annual_poa_western_canada = rowSums(days_in_months*western_canada_poa) western_canada_annual_poa = data.frame(unique(western_canada_ellipsoid@data$NAME_2), annual_poa_western_canada) colnames(western_canada_annual_poa) = c("NAME_2", "Insolation") #This is where we got the projected maps of Alberta + BC western_canada_ellipsoid = canada_level2_ellipsoid[which(canada_level2_ellipsoid$NAME_1 == "Alberta" | canada_level2_ellipsoid$NAME_1 == "British Columbia"),] #Give each row an ID western_canada_ellipsoid@data$id = rownames(western_canada_ellipsoid@data) #Join the POA insolation data to the geographical data.frame western_canada_ellipsoid@data = join(western_canada_ellipsoid@data, western_canada_annual_poa, by = "NAME_2") #Now we create a data.frame which can be projected using ggplot2 #Note it has 661,178 rows! western_canada_fortified <- fortify(western_canada_ellipsoid) #...but we want to make sure that it has the correct data attached to it, showing the POA insolation western_canada_fortified <- join(western_canada_fortified, western_canada_ellipsoid@data, by = "id") the_plot <- ggplot() + geom_polygon(data = western_canada_fortified, aes(x = long, y = lat, group = group, fill = Insolation), color = "black", size = 0.15, show.legend = TRUE) + scale_fill_distiller(name="POA insolation, annual \n kWh per m^2", palette = "Spectral", breaks = pretty_breaks(n = 9))

...and this is what the map now looks like.

A couple of small things this time. Firstly, I created the map/plot as an object "the_plot" and then executed that through the Console. I'm not sure why, but there seems to be some kind of long-running bug with ggplot which is avoided by this facile trick. Secondly, this time I calculated the annual insolation by multiplying each row of the monthly POA data.frame by the number of days in a month and then summing. That bit should have been straightforward.

I find the above map aesthetically pleasing, but there's a limit to the kind of story you can tell with this sort of image. There are of course the same caveats from last time: the districts themselves are quite large, and pretending that the same insolation regimes and tilt angles are valid throughout a Level 2 district will have its limitations. On another note, this single "snapshot" of annual data tells us little about how albedo from snow impacts different parts of Western Canada.

Comments on Albedo and Insolation

So, quantitatively, how much does the consideration of albedo actually change things? Well, I'll make a data table comparing the GHI and POA insolations, on a yearly basis, for each of the sites. You could install the "data.table" package for this; if you'd prefer not to, then you could create any old data.frame but data tables do make things simpler down the road.

display_insolations = data.table(western_canada_district_names, western_canada_solar_insolation_annual, western_canada_annual_poa[,2], keep.rownames = FALSE) colnames(display_insolations) = c("District name", "GHI", "POA") #Print it out and have a look > display_insolations District name GHI POA 1: Medicine Hat 1343.20 1469.381 2: Lethbridge 1193.55 1393.421 3: Claresholm 1182.60 1391.090 4: Hanna 1153.40 1359.715 5: Strathmore 1149.75 1365.419 6: Calgary 1157.05 1383.741 7: Wainwright 1233.70 1421.541 8: Red Deer 1105.95 1286.419 9: Rocky Mountain House 1131.50 1301.670 10: Lloydminster 1135.15 1361.056 11: Edmonton 1168.00 1341.603 12: Cold Lake 1332.25 1463.725 13: White Court 1332.25 1474.018 14: Hinton 1266.55 1435.325 15: Canmore 1262.90 1439.510 16: Fort McMurray 1251.95 1435.881 17: Slave Lake 1222.75 1406.745 18: Grande Cache 1222.75 1409.017 19: Grande Prairie 1219.10 1410.113 20: Alberni-Clayoquot 1197.20 1469.776 21: Bulkley-Nechako 1171.65 1363.674 22: Capital 1241.00 1484.624 23: Cariboo 1237.35 1411.877 24: Central Coast 1138.80 1413.606 25: Central Kootenay 1324.95 1467.803 26: Central Okanagan 1332.25 1466.766 27: Columbia-Shuswap 1233.70 1432.005 28: Comox-Strathcona 1208.15 1451.819 29: Cowichan Valley 1204.50 1477.752 30: East Kootenay 1361.45 1461.900 31: Fraser-Fort George 1131.50 1372.002 32: Fraser Valley 1273.85 1465.747 33: Greater Vancouver 1259.25 1471.242 34: Kitimat-Stikine 1040.25 1332.545 35: Kootenay Boundary 1332.25 1474.285 36: Mount Waddington 1109.60 1436.950 37: Nanaimo 1197.20 1476.098 38: North Okanagan 1270.20 1456.289 39: Northern Rockies 1087.70 1267.006 40: Okanagan-Similkameen 1273.85 1471.606 41: Peace River 1138.80 1318.155 42: Powell River 1208.15 1450.495 43: Skeena-Queen Charlotte 1058.50 1375.758 44: Squamish-Lillooet 1270.20 1447.448 45: Stikine 1062.15 1272.082 46: Sunshine Coast 1259.25 1460.586 47: Thompson-Nicola 1270.20 1436.779

Is there a difference across the districts in how much albedo adds to the insolation and, if so, does that happen at specific times of year?

If one defines the proportion of ground reflected insolation to total (POA) insolation, then we can simply plot that against months of the year. For our dataset, the proportion of ground reflected insolation to the total POA insolation varies from 0.44% to 9.5%; but both of these values are hit in the same location, in the "Northern Rockies" district of British Columbia (longitude and latitude of the centroid: 123.44 West and 59.07 North). These points occur at December and June, respectively; clearly, the GHI component is the major defining feature of this insolation.

In general, this is equally true for both Alberta and British Columbia; the months during which ground-reflected radiation accounts for the highest possible proportion of the total POA insolation are determined by the GHI. This is shown in the next picture and detailed in the following code snippet.

We arrived here through:

ground_reflected_insolation = data.frame(matrix(nrow = nrow(western_canada_coordinates), ncol = 12)) for(i in 1:47) { for(j in 1:12) { ground_reflected_insolation[i,j] = western_canada_albedo_monthly[i,j]*western_canada_ghi_monthly[i,j]*(0.5*(1-cos(tilt_angles_western_canada[i,j]))) } } ground_reflected_to_poa = data.frame(matrix(nrow = nrow(western_canada_coordinates), ncol = 12)) ground_reflected_to_ghi = data.frame(matrix(nrow = nrow(western_canada_coordinates), ncol = 12)) for(i in 1:47) { for(j in 1:12) { ground_reflected_to_poa[i,j] = ground_reflected_insolation[i,j]/western_canada_poa[i,j] ground_reflected_to_ghi[i,j] = ground_reflected_insolation[i,j]/western_canada_ghi_monthly[i,j] } } for(i in 1:47) { for(j in 1:12) { if(ground_reflected_to_poa[i,j] == min(ground_reflected_to_poa)) { print(paste(i,j, sep = ", ")) } } } for(j in 1:12) { for(i in 1:47) { if(ground_reflected_to_poa[i,j] == max(ground_reflected_to_poa[,j])) { print(paste(i,j, sep = ", ")) print(western_canada_district_names[i]) } } } ground_to_poa_bc = vector(length = 12) ground_to_poa_ab = vector(length = 12) ground_to_ghi_ab = vector(length = 12) ground_to_ghi_bc = vector(length = 12) for(j in 1:12) { ground_to_poa_ab[j] = mean(ground_reflected_to_poa[1:19,j]) ground_to_poa_bc[j] = mean(ground_reflected_to_poa[20:47, j]) ground_to_ghi_ab[j] = mean(ground_reflected_to_ghi[1:19, j]) ground_to_ghi_bc[j] = mean(ground_reflected_to_ghi[20:47, j]) } plot(ground_to_ghi_ab, type = "b", pch = 12, col = "red", ylab = "", xlab = "Month") lines(ground_to_ghi_bc, type = "b", pch = 12, col = "green") legend(2, 0.06, legend = c("Alberta", "BC"), col = c("red", "green"), pch = 12) title(main = "Ground reflected insolation as a proportion of POA \n")

Some Closing Remarks

So I started out hoping to "prove" that snow cover in some Alberta districts would more or less compensate for the reduced GHI during in the winter months. While that does not seem to be the case--ie, I was wrong--I think there is still space to dig a bit deeper in the next blog post, where I will also examine questions such as length of daylight hours, temperatures and how these correlate to give us not only insolation/irradiance but also PV yield, measured in kWh. I also stopped short of doing a regression analysis of insolation as a function of latitude as I did not think it would be helpful in this limited case of Alberta and BC, although there is some evidence that looking at PV yield as a function of elevation/altitude is a separate but good idea. Finally, some people who read this will probably be frustrated that I have used insolation and irradiance more or less interchangably. Please note that I have used "insolation" to refer to an amount of energy radiating downwards and measured in kWh (less often Wh) or Joules (kJ, MJ, etc). Where I did not think it would make too much of a difference however, I did not mind using "irradiance" or simply "radiation" although, of course, that will not do in the blog post coming up.

Sunday, 24 January 2021

Making a Solar Insolation Map for Alberta (For novices!)

Been a while since I've blogged here; wrapping up an MSc and moving continents from Europe to North America is all the excuse I need. This blog post is not going to be revolutionary, and obviously it builds on a lot of what others have done before (see towards the end) but I hope enough people find it fun and perhaps informative.

So I've bitten the bullet and emigrated to Edmonton, Alberta (that's the Canadian province just to the east of British Columbia, and to the west of Saskatchewan--north of Idaho, where they grow the potatoes, if you like). I've been pleasantly surprised by the number of individuals I've met with a positive outlook on renewables--this is a bit of a relief, when we landed in Canada and quarantined in Calgary, it felt like I was being bombarded with all sorts of messaging which was pro-oil and gas (read: climate denialist). In fact, there's some data to indicate that the anti-renewables crowd are not representative of Alberta--the "Saudi Arabia of Canada"-- at all. Institutionally, I've also enjoyed reading the output from the Pembina Institute, which seems to specialise in "blue skies optimism" backed up by solid research.

For those who don't know: the cold temperatures and high levels of solar insolation are a perfect mix for solar PV panels. I was particularly drawn to these heat maps drawn up by Pembina's Binnu Jeyakumar: https://www.pembina.org/blog/unlocking-renewables-will-unlock-jobs-alberta At the time I first saw them late last year, I was stuck in finishing my thesis, but now that I've got the time, I'm going to try and replicate one of these maps in R. When finished, I will have drawn a heat map in R which illustrates the differences in solar insolation at "Census Division" level across the Province of Alberta.

When we're done, this is what the map of Alberta is going to look likeLet's start at the beginning. In general, countries have multiple levels of provincial government. For example, any neighborhood in Canada is going to have three layers/levels of government: a Federal government, based in Ottawa, Level 0; a pronvincial government, let's say Alberta, which is Level 1; and a municipal government, with a town council and the like. Let's say we start with Edmonton, which in this case is going to be Level 2. What does a map of Canada look like at that level of granularity? First, download the data from GADM as follows. I also download Level 1 and Level 2 :

library(rgeos)

library(maptools)

library(ggmap)

library(ggplot2)

library(sp)

library(rgdal)

library(raster) #Various packages for GIS and mapping

library(plyr)

#This one is for database manipulations like "join"

library(nasapower)

#To get the solar insolation and other meteorological data

library(scales)

#This one allows us to do things like have nice scales for the legends on the images we create

#Download the files for Levels 1 and 2 SPDFs covering Canada

can_level1 = getData("GADM", country = "CA", level = 1)

can_level2 = getData("GADM", country = "CA", level =2)

plot(can_level1, col = "pink", bg = "lightblue")

> names(can_level1) [1] "GID_0" "NAME_0" "GID_1" "NAME_1" "VARNAME_1" "NL_NAME_1" "TYPE_1" [8] "ENGTYPE_1" "CC_1" "HASC_1"

> can_level1$NAME_1 [1] "Alberta" "British Columbia" "Manitoba" [4] "New Brunswick" "Newfoundland and Labrador" "Northwest Territories" [7] "Nova Scotia" "Nunavut" "Ontario" [10] "Prince Edward Island" "Québec" "Saskatchewan" [13] "Yukon"

> alberta_level1 = can_level1[which(can_level1$NAME_1 == "Alberta"),]

> plot(alberta_level1, col = "green", add = TRUE)

WGS84 <- CRS("+proj=longlat +ellps=WGS84 +datum=WGS84 +no_defs") canada_level2_ellipsoid = spTransform(can_level2, WGS84)

alberta_ellipsoid = canada_level2_ellipsoid[which(canada_level2_ellipsoid$NAME_1 == "Alberta"),]

alberta_coordinates = data.frame(matrix(ncol = 2, nrow = length(alberta_ellipsoid$NAME_2))) for(i in 1:length(alberta_ellipsoid$NAME_2)) { alberta_coordinates[i,] = data.frame(gCentroid(alberta_ellipsoid[i,])) }

ghi_retrieved = nasapower::get_power(community = "SSE", pars = "ALLSKY_SFC_SW_DWN", lonlat = c(alberta_coordinates[1,1], alberta_coordinates[1,2]), temporal_average = "CLIMATOLOGY")

Here, "ALLSKY_SFC_SW_DWN" retrieves the GHI or "Global Horizontal Irradiation; and "Climatology" defines the inbuilt timeframe from 1-1-1984 to 31-12-2013. One important thing you'll notice is that the average daily insolation on a GHI basis is stored in column 16 of the returned data.frame (actually a tibble in the first instance from the above line). So we will build an all-Alberta data frame containing the average yearly insolation during the "CLIMATOLOGY" timeframe thus:

alberta_solar_insolation_annual = data.frame(matrix(ncol = 1, nrow = nrow(alberta_coordinates))) for(i in 1:nrow(alberta_coordinates)) { ghi_retrieved = nasapower::get_power(community = "SSE", pars = "ALLSKY_SFC_SW_DWN", lonlat = c(alberta_coordinates[i,1], alberta_coordinates[i,2]), temporal_average = "CLIMATOLOGY") alberta_solar_insolation_annual[i,] = data.frame(ghi_retrieved[16])*365 print("One more Census Division") }

alberta_solar_insolation_annual = cbind(unique(alberta_ellipsoid@data$NAME_2), alberta_solar_insolation_annual) #We set also the metadata colnames(alberta_solar_insolation_annual) = c("NAME_2", "Insolation")

alberta_ellipsoid@data

names(alberta_ellipsoid@data) [1] "GID_0" "NAME_0" "GID_1" "NAME_1" "NL_NAME_1" "GID_2" "NAME_2" "VARNAME_2" [9] "NL_NAME_2" "TYPE_2" "ENGTYPE_2" "CC_2" "HASC_2" "GID_0.1" "NAME_0.1" "GID_1.1" [17] "NAME_1.1" "NL_NAME_1.1" "GID_2.1" "VARNAME_2.1" "NL_NAME_2.1" "TYPE_2.1" "ENGTYPE_2.1" "CC_2.1" [25] "HASC_2.1"

alberta_ellipsoid@data = join(alberta_ellipsoid@data, alberta_solar_df, by = "NAME_2") alberta_solar_df_fortified_B = fortify(alberta_ellipsoid)

ggplot() + geom_polygon(data = alberta_solar_df_fortified_B, aes(x = long, y = lat, group = group, fill = Insolation), size = 0.12) + scale_fill_distiller(name="Insolation, annual \n kWh per m^2", palette = "Spectral", breaks = 7)

This looks pretty and all, but it's also not entirely conclusive of anything if it's not put into perspective. So I repeated the steps above, but instead of Level 2 districts in Alberta alone, I mapped them for the two provinces of Western Canada: Alberta and British Columbia, the latter of which is to the west (left) of the image. As can be clearly seen, Alberta is pretty much consistently more sunny than British Columbia.

Coming up in future posts, I will move on to try and map not only insolation but also more decision variable type values, such as PV yield. A yet more sophisticated approach involves also the intermittency of solar PV and how it matches, or doesn't match, electricity demand (i.e., without storage, PV electricity is no good if people only play video games and turn the lights on at night). That's for much later down the road! To see a similar example which I relied on quite a bit in this case, see here about making a country level map with ggplot2: https://rpubs.com/spoonerf/countrymapggplot2